Recently I built a new system with the primary intention of running Linux the vast majority of the time and never having to stop what I am doing to reboot into Windows whenever I want to play a game. That meant gaming in a VM, which in turn meant VGA passthrough. I am an Enterprise Linux 6 user, and Fedora is too bleeding edge for me. What I really wanted to run is KVM virtualization, but VGA passthrough didn’t work for me with EL6 packages, even after selectively updating to much newer kernel, qemu and libvirt packages. VMware ESX won’t work with PCI passthrough on my EVGA SR-2 motherboard because EVGA, in their infinite wisdom, decided to put all the PCIe slots behind Nvidia NF200 routers/bridges, which don’t support the PCIe ACS functionality that ESX requires for PCI passthrough. That left me with Xen as the only remaining option. I now mostly have Xen working the way I want – not without issues, but I will cover virtualized gaming and Xen details in another article. For now, what matters is that Xen VGA passthrough currently only works with ATI cards and Nvidia Quadro (but not GeForce) cards.

ATI cards are not an option for me for three reasons. First, there are various driver bugs (e.g. in handling monitors on which the refresh rate is dependent on resolution due to bandwidth limitations). Second, there is a lack of features: there is no option to use anything but EDID modes, to the extent of completely ignoring monitor driver .inf files. The custom mode feature used to exist in the drivers (the documentation for it can still be found on the AMD website) but was removed at some point. Third, and most importantly, cards more recent than the Radeon HD4xxx series lack multiple DL-DVI outputs. Radeon HD5xxx and later cards only come with a single DL-DVI port; on those that come with a second DVI port, even though it physically looks like a DL port, it only provides a single link.

Nvidia GeForce cards don’t work in a virtual machine, at least not without unmaintained patches that don’t work with all cards and guest operating systems.

That leaves Nvidia Quadro cards. Unfortunately, those are eye-wateringly expensive. But, on paper, the spec lists the same GPUs for GeForce and Quadro cards. This got me looking into what makes a Quadro a Quadro, and a few days of research and a weekend of experimentation yielded some interesting and very useful results. While it looks like some features, such as certain GL functions, are disabled in the chips (probably by laser cutting), others are purely down to the driver deciding whether to enable them or not. It turns out that making cards work in a VM is one of the driver-dependent features.

Phase 1: Verify That Quadro Cards Work in a VM When GeForce Cards Don’t

Looking at the specification and feature list of Quadro cards, the Quadro 2000, 4000, 5000 and 6000 models support the “MultiOS” feature, which is what Nvidia calls VGA passthrough. So, the first thing I did was acquire a “cheap” second-hand Quadro 2000 on eBay. Cheap here is a relative term, because a second-hand Quadro costs between 3 and 8 times as much as the equivalent (and usually higher specification) GeForce card. The Quadro card proved to work flawlessly, but the Quadro 2000 is based on a GF106 chip with only 192 shaders, so gaming performance was unusable at 3840×2400 (I will let go of my T221 monitors when they are pried out of my cold, dead fingers). Gaming at 1920×1200 was just about bearable with some detail level reductions, but even so it was borderline.

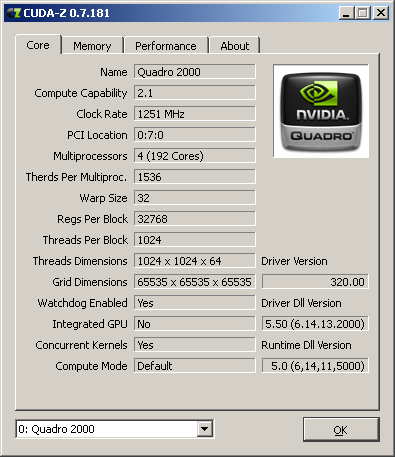

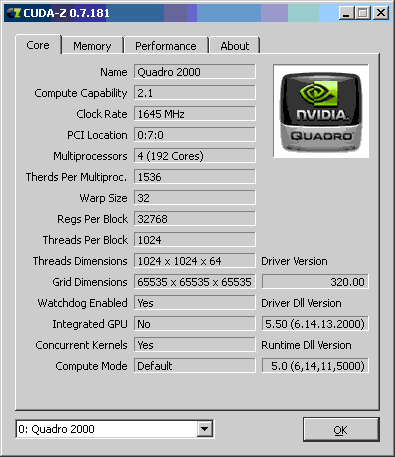

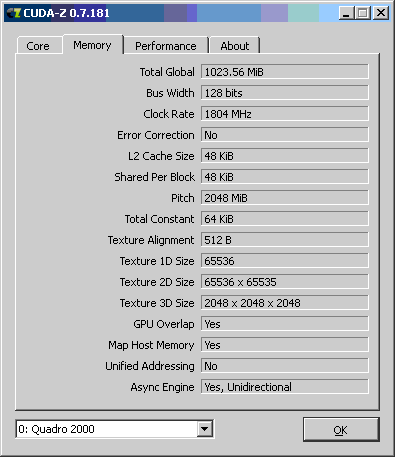

Here is how the genuine Quadro 2000 shows up in GPU-Z and CUDA-Z:

And here are the genuine Quadro 2000 SPECviewperf11 results:

| VIEWSET | COMPOSITE |
|---|---|
| catia-03 | 23.86 |
| ensight-04 | 16.63 |
| lightwave-01 | 43.12 |
| maya-03 | 36.25 |
| proe-05 | 7.07 |
| sw-02 | 32.21 |
| tcvis-02 | 18.82 |
| snx-01 | 17.50 |

Phase 2: Get an Equivalent GeForce Card and Investigate What Makes a Quadro a Quadro

The next item on the acquisition list was a GeForce GTS450 card. On paper the spec for a GTS450 is identical to a Quadro 2000:

- GF106 GPU
- 192 shaders
- 1GB of GDDR5

Note: There are some models that are different despite also being called GTS450. Specifically, there is an OEM model that only has 144 shaders, and there is a model with 192 shaders but with GDDR3 memory rather than GDDR5. The DDR3 model may be more difficult to modify due to various differences, and the 144 shader model may not work properly as a Quadro 2000.

Armed with the information I dug out, I set out to modify the GTS450 into a QuadForce (a splice between a Quadro and a GeForce – and Gedro just doesn’t sound right). This was successful: the card is now detected as a Quadro 2000, and everything seems to work accordingly. VGA passthrough worked, and since the GTS450 is clocked significantly higher than the Quadro 2000, gaming performance improved to the point where 1920×1200 was quite livable. What didn’t improve to Quadro levels is the OpenGL performance of certain functions that appear to have been disabled on GeForce GPUs. Consequently, SPECviewperf11 results are much lower than on a real Quadro 2000 card, but the GeForce GTS450 scores higher on every gaming test, since games don’t use the missing functionality and the GeForce card is clocked higher. It is unclear at the moment whether the extra GL functionality was disabled on the GPU die by laser cutting or whether it is disabled externally to the GPU, e.g. by different hardware strapping or pin shorting via the PCB components – more research into this will need to be done by someone more interested in those features than me. Since the stamped-on GPU markings differ between the GTS450 (GF106-250, checked against 3 completely different GDDR5 GTS450 cards) and the Quadro 2000 (GF106-875 on the one I have), it seems likely the extra GL functionality is laser cut out of the GPU.
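If the card sits in a Linux host (for example the Xen dom0), one rough way to see which model it identifies as is to look at the `lspci -nn` listing. The sketch below is only an illustration, not part of the modification itself: it assumes `lspci` from pciutils is installed, the helper name `nvidia_display_devices` is made up for this example, and the sample line in the comment is illustrative, so check the names and IDs against your own output.

```python
import re
import subprocess

# Matches "lspci -nn" lines of this general shape (illustrative sample):
# 01:00.0 VGA compatible controller [0300]: NVIDIA Corporation GF106GL [Quadro 2000] [10de:0dd8] (rev a1)
LINE_RE = re.compile(
    r"^(?P<slot>\S+)\s+[^\[]+?\s+\[(?P<class_id>[0-9a-f]{4})\]:\s+"
    r"(?P<name>.*?)\s+\[(?P<vendor>[0-9a-f]{4}):(?P<device>[0-9a-f]{4})\]"
)

NVIDIA_VENDOR_ID = "10de"   # PCI vendor ID assigned to NVIDIA
DISPLAY_CLASS_PREFIX = "03"  # PCI class 03xx = display controller


def nvidia_display_devices(lspci_text):
    """Return (slot, device_id, name) for every NVIDIA display controller."""
    devices = []
    for line in lspci_text.splitlines():
        match = LINE_RE.match(line.strip())
        if not match:
            continue
        if (match.group("vendor") == NVIDIA_VENDOR_ID
                and match.group("class_id").startswith(DISPLAY_CLASS_PREFIX)):
            devices.append(
                (match.group("slot"), match.group("device"), match.group("name"))
            )
    return devices


def main():
    # Runs the real lspci; requires pciutils on the host.
    listing = subprocess.run(
        ["lspci", "-nn"], capture_output=True, text=True, check=True
    ).stdout
    for slot, device_id, name in nvidia_display_devices(listing):
        print(f"{slot}  device ID {device_id}  {name}")


if __name__ == "__main__":
    main()
```

Running it before and after the modification and comparing the reported name and device ID gives a quick host-side check that complements what GPU-Z shows inside the Windows guest.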

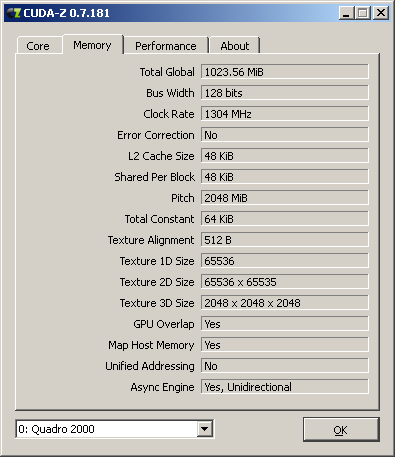

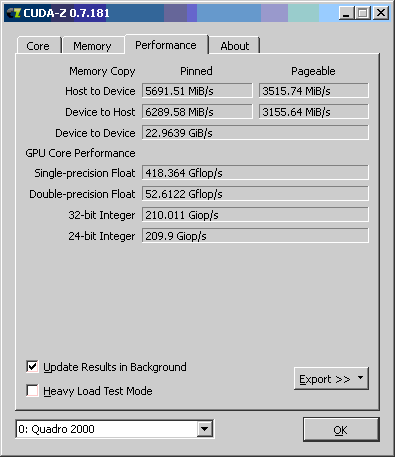

Here is how the GTS450 modified to Quadro 2000 shows up in GPU-Z and CUDA-Z:

CUDA-Z performance seems to scale with the clock speeds, so the faux-Quadro card wins.

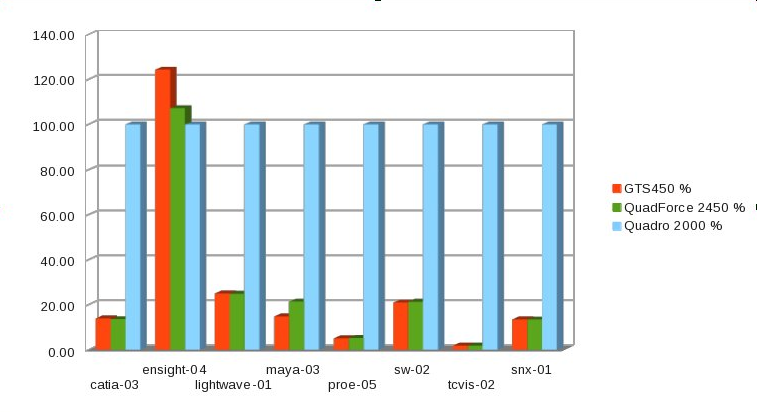

Here are the SPECviewperf11 results for a GTS450 before and after modifying it into a Quadro 2000. As you can see, in this benchmark the missing GL functions make a huge difference, but some viewsets still improve substantially after the modification:

GTS450:

| VIEWSET | COMPOSITE |
|---|---|
| catia-03 | 3.33 |
| ensight-04 | 20.67 |
| lightwave-01 | 10.80 |
| maya-03 | 5.38 |
| proe-05 | 0.36 |
| sw-02 | 6.75 |
| tcvis-02 | 0.35 |
| snx-01 | 2.37 |

QuadForce 2450:

| VIEWSET | COMPOSITE |
|---|---|
| catia-03 | 3.24 |
| ensight-04 | 17.83 |
| lightwave-01 | 10.72 |
| maya-03 | 7.75 |
| proe-05 | 0.37 |
| sw-02 | 6.87 |
| tcvis-02 | 0.35 |
| snx-01 | 2.35 |

Here is the data in chart form (relative performance, real Quadro 2000 = 100%).

As you can see, the real Quadro dominates in all tests except ensight-04, where it gets soundly beaten by the GeForce card. Modification does seem to improve some aspects of performance. In particular, Maya results improve by a whopping 44% following the modification.
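The relative figures can be reproduced from the three SPECviewperf11 tables above. The following is a small sketch of that arithmetic; the scores are copied from the tables, while the function and variable names are just for illustration.

```python
# SPECviewperf11 composite scores copied from the tables above.
QUADRO_2000 = {
    "catia-03": 23.86, "ensight-04": 16.63, "lightwave-01": 43.12,
    "maya-03": 36.25, "proe-05": 7.07, "sw-02": 32.21,
    "tcvis-02": 18.82, "snx-01": 17.50,
}
GTS450 = {
    "catia-03": 3.33, "ensight-04": 20.67, "lightwave-01": 10.80,
    "maya-03": 5.38, "proe-05": 0.36, "sw-02": 6.75,
    "tcvis-02": 0.35, "snx-01": 2.37,
}
QUADFORCE_2450 = {
    "catia-03": 3.24, "ensight-04": 17.83, "lightwave-01": 10.72,
    "maya-03": 7.75, "proe-05": 0.37, "sw-02": 6.87,
    "tcvis-02": 0.35, "snx-01": 2.35,
}


def relative_to(baseline, scores):
    """Express each score as a percentage of the baseline card's score."""
    return {name: 100.0 * scores[name] / baseline[name] for name in baseline}


def percent_change(before, after):
    """Percentage change going from 'before' to 'after'."""
    return 100.0 * (after - before) / before


def main():
    stock = relative_to(QUADRO_2000, GTS450)
    modded = relative_to(QUADRO_2000, QUADFORCE_2450)
    print(f"{'viewset':<14}{'GTS450 %':>10}{'QuadForce %':>13}{'change %':>10}")
    for name in QUADRO_2000:
        change = percent_change(GTS450[name], QUADFORCE_2450[name])
        print(f"{name:<14}{stock[name]:>10.1f}{modded[name]:>13.1f}{change:>10.1f}")


if __name__ == "__main__":
    main()
```

The `change %` column is where the Maya improvement comes from: 7.75 against 5.38 is an increase of roughly 44%.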

If you are only interested in support for VGA passthrough to virtual machines, modifying a GeForce card into a Quadro can be an extremely cost-effective solution (especially if your budget wouldn’t stretch to a real Quadro card anyway). If you are only interested in performance of the kind measured by SPECviewperf, then depending on the applications you use, a real Quadro is still the better option in most cases.