Following my research for the previous article about the performance of SD/CF/USB flash modules, the only conclusion I could reach is that most of them are pretty dire. The only notable exception among the SD cards seems to be the latest generation of SanDisk Extreme Pro (95MB/s) cards, which just about managed to squeeze out enough random write performance to match a 7200rpm disk. That still falls well short of any reasonable SSD, so I wanted to see what else could be done about installing extra storage with good performance into an AC100.

What I came across is this: the SuperTalent RC8 USB stick. It may look like a USB stick, but it is actually a full-on SSD, featuring a SandForce 1200 flash controller. I figured this was worth a shot, even though the specifications indicate it is rather large (far too large to fit inside an AC100 in its standard form). Stripped of its casing, however, it looked like the RC8 might just fit inside the AC100.

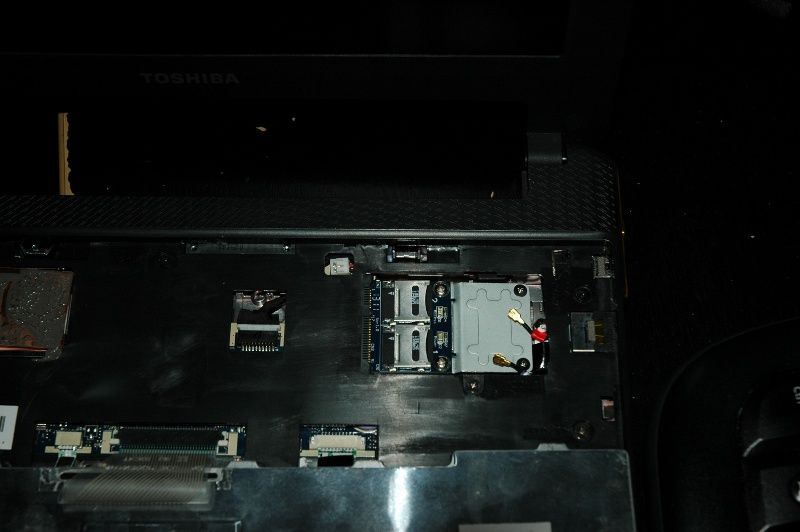

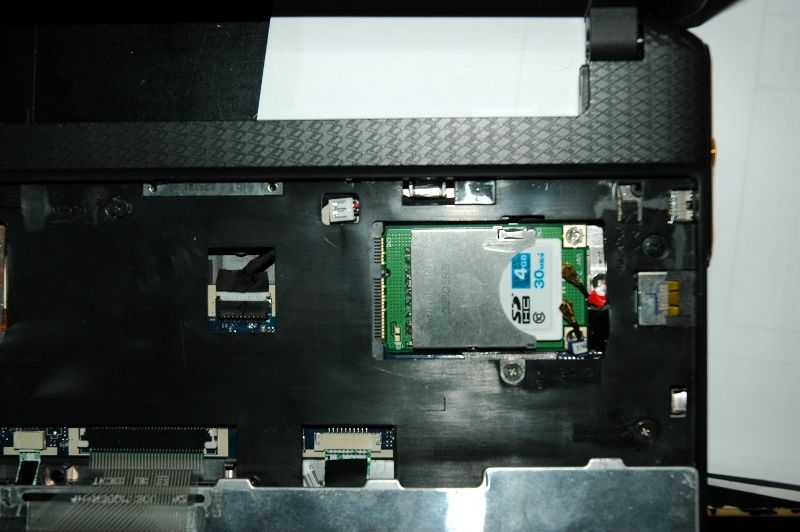

This is what I ended up with. There appears to be only one place inside an AC100 where a bare RC8 circuit board could be fitted. You will need the following:

1) P3MU mini-PCIe USB break-out module

3) Custom made USB cable (male and female type A USB connectors, some single core wire, and some skill with a soldering iron)

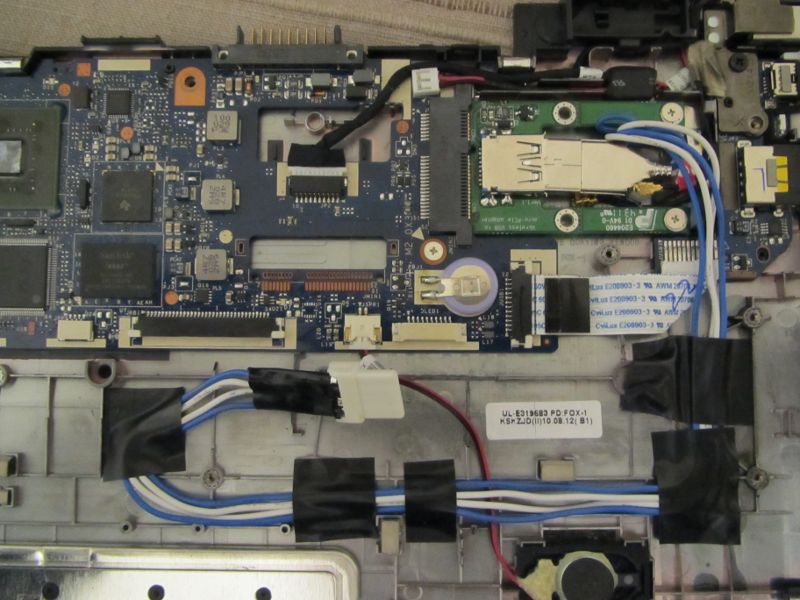

Measure out exactly how long you need the cable to be – there is no room to tuck away excess cable inside an AC100. Here is what my cable layout ended up looking like.

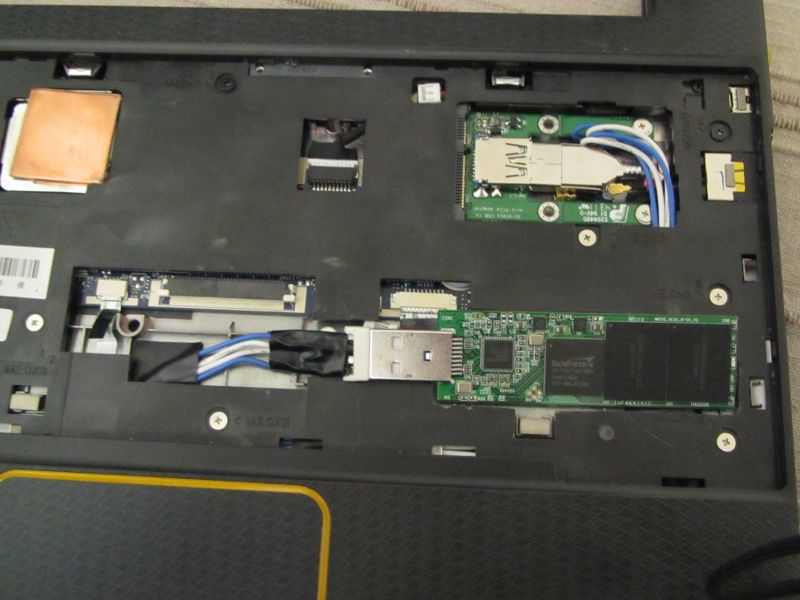

This is what it looks like with the top panel fitted. Note the large cut-out that has been made below the mini-PCIe slot access hole.

And again with the screws fitted. Note that one of the screw holes is in the area that had to be cut out. This shouldn’t affect the structural integrity of the AC100, though. Also note that the right speaker cable has been re-routed slightly to now go over the LED ribbon cable.

This is what it looks like with the RC8 attached. Now you can see why the cut-out in the top panel was exactly the shape it was – I specifically cut out the minimum possible amount to allow the RC8 to fit.

I also put a piece of thin transparent sticky tape over it to hold it in place, and to make sure nothing can short out against the underside of the keyboard.

And that is pretty much it. Put the keyboard back in and bolt it all together. The metal part of the USB connector will sit a tiny bit above the line of the panel, but the only way you’ll notice it once you put the keyboard back on is by knowing that there is a tiny bulge there.

Your AC100 should now be able to handle ~2000 IOPS on both random reads and random writes, along with the much better life expectancy that proper flash management brings.
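If you want to sanity-check the random read side of that figure on your own unit, something along these lines should do. This is only a minimal sketch, not the tool the number above was measured with: the function name `random_read_iops`, the 4KB block size and the one second duration are all assumptions made for illustration. It only reads, so it is safe to point at a large file on the RC8.

```python
import os
import random
import time


def random_read_iops(path, block_size=4096, duration=1.0, seed=0):
    """Issue random block-aligned reads against `path` for roughly
    `duration` seconds and return the achieved reads per second.

    The name and defaults are illustrative assumptions, not a standard tool.
    """
    rng = random.Random(seed)
    fd = os.open(path, os.O_RDONLY)
    try:
        # Seeking to the end gives the size for regular files and block devices.
        size = os.lseek(fd, 0, os.SEEK_END)
        blocks = size // block_size
        if blocks < 1:
            raise ValueError("target is smaller than one block")
        count = 0
        start = time.perf_counter()
        deadline = start + duration
        while time.perf_counter() < deadline:
            offset = rng.randrange(blocks) * block_size
            os.pread(fd, block_size, offset)  # positional read, no seek needed
            count += 1
        elapsed = time.perf_counter() - start
    finally:
        os.close(fd)
    return count / elapsed
```

Bear in mind that reads of recently written or recently read data are served from the page cache in RAM, which will inflate the result enormously. The number only says something about the flash itself if the file is much larger than the machine's memory, or if the caches have been dropped beforehand.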

At this point I would like to point out just how impressed I am with the SuperTalent RC8 USB SSD. Not only is the performance phenomenal (for a USB stick at least), but it really behaves like a SATA SSD – to the point where you can use tools like hdparm and smartctl on it (yes, it even supports SMART).
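For anyone who wants to poke at the drive the same way, here is a rough sketch that runs a few read-only hdparm and smartctl queries and collects their output. The helper names `drive_query_commands` and `run_queries` are made up for this example, and the device node is something you have to supply yourself. The flags used (`hdparm -I`, `smartctl -i`, `-H` and `-A`) are standard ones, but I am assuming they pass through the USB interface unmodified on your setup.

```python
import shutil
import subprocess


def drive_query_commands(device):
    """Return argument lists for a few read-only queries against `device`."""
    return [
        ["hdparm", "-I", device],    # ATA identify data (model, firmware, features)
        ["smartctl", "-i", device],  # device identity as seen by smartmontools
        ["smartctl", "-H", device],  # overall SMART health self-assessment
        ["smartctl", "-A", device],  # vendor SMART attribute table
    ]


def run_queries(device):
    """Run each query whose tool is installed; return {command: output}."""
    results = {}
    for cmd in drive_query_commands(device):
        if shutil.which(cmd[0]) is None:
            continue  # tool not installed, skip rather than fail
        proc = subprocess.run(cmd, capture_output=True, text=True)
        results[" ".join(cmd)] = proc.stdout + proc.stderr
    return results
```

Call `run_queries()` as root with whatever node the RC8 shows up as on your system, then print the returned dictionary. Nothing here writes to the drive.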